ChatGPT Security: Discovering and Securing AI Tools

securityboulevard.com – 2023-06-17 01:33:47

Let’s talk about the darker side of the ChatGPT security story: a recent DarkReading report found that 4% of workers are leaking protected corporate information into AI tools by feeding schematics, statistics, instructions, and other intellectual property into large language models (LLMs). ChatGPT security took center stage in April 2023 when Samsung employees leaked intellectual property into ChatGPT (including both confidential product information and meeting notes), leading the company to ban the tool on May 2nd, 2023. Such risks are leading more and more organizations (such as Apple) to try to block these sites. As the number of generative AI and LLM tools and companies grows, the problem of ChatGPT security becomes more challenging.

ChatGPT Security is Simpler Than You Think

Of course, these AI systems can facilitate research and development efforts by simulating and generating ideas, designs, and prototypes, expediting innovation cycles. Unfortunately, they also create a wide range of security issues for companies because of the behaviors noted above, in addition to attackers searching LLMs for carelessly shared company data. (Side note: have you opted out of sharing your company and personal data with ChatGPT?)

There is a profound danger in reactive security without strategy, and much opportunity for overcorrection. Some of the solutions for ChatGPT security include blocking access by directing all traffic over a VPN, and then using an outbound security stack to inspect traffic. Eventually, though, employees find new ways to get around some of these blocks, or hunt for other tools that aren’t blocked. And drastic measures like the ones Samsung and Apple have taken leave security gaps of their own. Blocking AI tools completely from your organization isn’t necessary if you have the right security tools.

Discovering AI tools

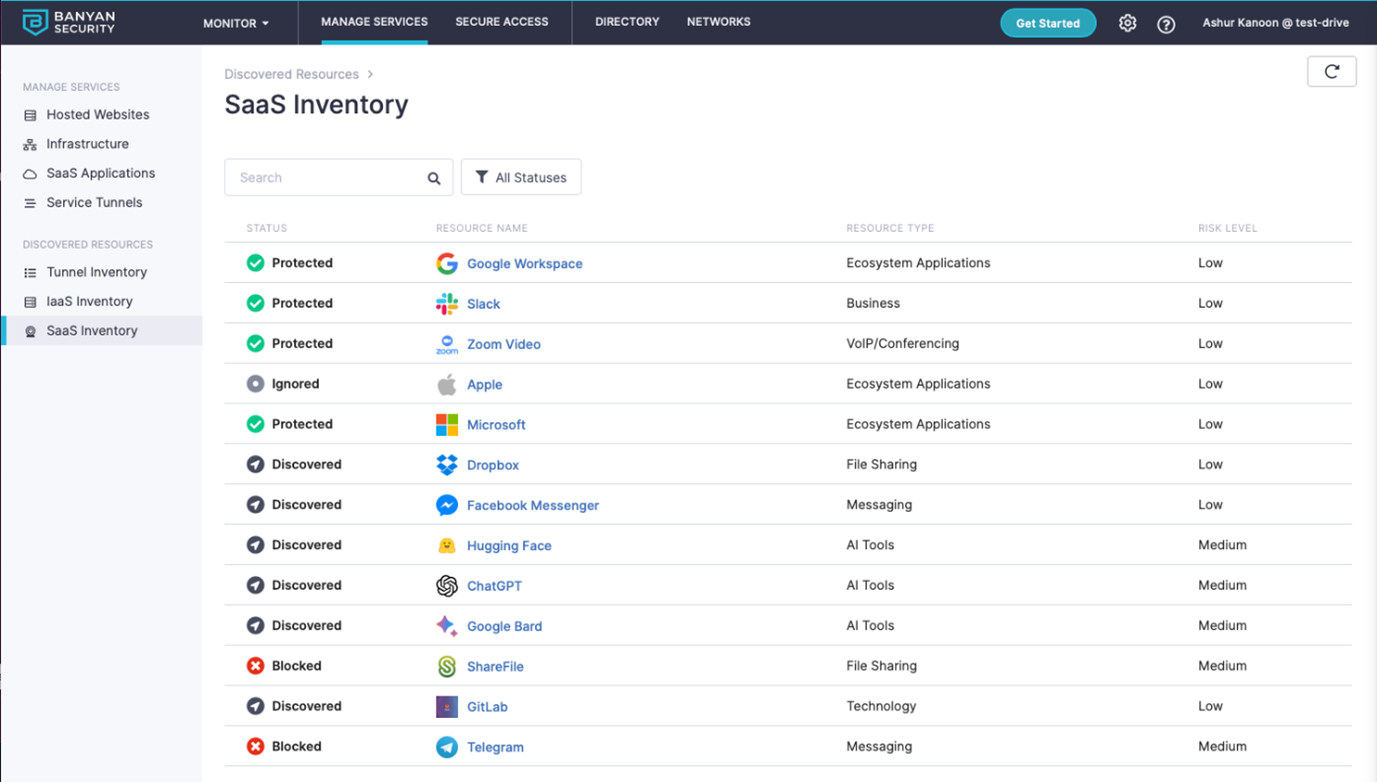

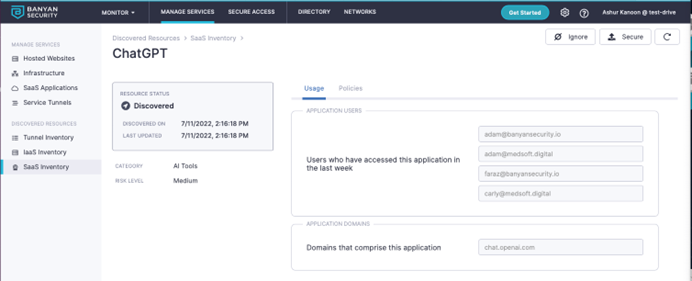

Discovery is a crucial step in combating data exfiltration. Security for AI should be able to detect quickly, then categorize accurately, where the data is going. With new AI tools popping up daily, this isn’t always so easy. Banyan’s solution looks at all DNS transactions, and its real-time categorization engine assesses a range of information. Our security for AI also inspects traffic for sensitive data, such as PII, PHI, secrets and keys, and PCI data, using a modern cloud-based Data Loss Prevention (DLP) engine. As the screenshots show, the administrator sees where users are going, when, and from what device this traffic originates, as well as what type of data is being sent.

It is worth noting that our solution is always-on, so end users benefit from the protection without having to do anything. Administrators also gain visibility without needing to configure anything extra, or have their users do the same. As soon as the first user visits the first website, the administrator gets actionable insights. These are presented as applications and categories, which are much easier to use and build policies around than individual domains.

A single SaaS application can have hundreds or thousands of domains, so being able to quickly find a SaaS application (and how it’s been used) is the first step to creating a comprehensive policy:

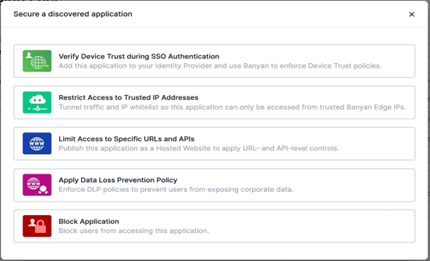
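To make the domain-to-application idea concrete, here is a minimal sketch of how observed DNS lookups could be rolled up into applications and categories. It is not Banyan’s implementation: the `APP_CATALOG` table, its entries, and the `classify` and `summarize` helpers are all hypothetical names invented for illustration, and a real categorization engine would draw on far more signals than a suffix match.

```python
from collections import Counter

# Hypothetical catalog mapping a parent domain to (application, category).
# The entries are illustrative assumptions, not a real vendor catalog.
APP_CATALOG = {
    "openai.com": ("ChatGPT", "Generative AI"),
    "example-ai.test": ("Example AI Tool", "Generative AI"),
}


def classify(hostname):
    """Return (application, category) for the longest matching domain suffix."""
    labels = hostname.lower().rstrip(".").split(".")
    # Try the full hostname first, then progressively shorter parent domains.
    for i in range(len(labels)):
        candidate = ".".join(labels[i:])
        if candidate in APP_CATALOG:
            return APP_CATALOG[candidate]
    return ("Unknown", "Uncategorized")


def summarize(dns_log):
    """Count lookups per (user, application, category) from (user, hostname) pairs."""
    counts = Counter()
    for user, hostname in dns_log:
        application, category = classify(hostname)
        counts[(user, application, category)] += 1
    return counts


if __name__ == "__main__":
    log = [
        ("alice", "chat.openai.com"),
        ("alice", "api.openai.com"),
        ("bob", "www.example.com"),
    ]
    for key, count in summarize(log).items():
        print(key, count)
```

Matching on whole labels rather than raw string suffixes keeps a lookalike such as `notopenai.com` from being attributed to the wrong application.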

Once you’ve Discovered Resources, you have options for what to do next. The most restrictive option is to completely block these types of sites, along with new domains and proxies that may be used to circumvent blocks. Less restrictive options include proxying or tunneling the traffic so that it can be inspected further or subjected to URL filtering.

As you can see, the end user is told why access was denied rather than being silently blackholed, which could lead to a call to the IT helpdesk and degraded productivity:

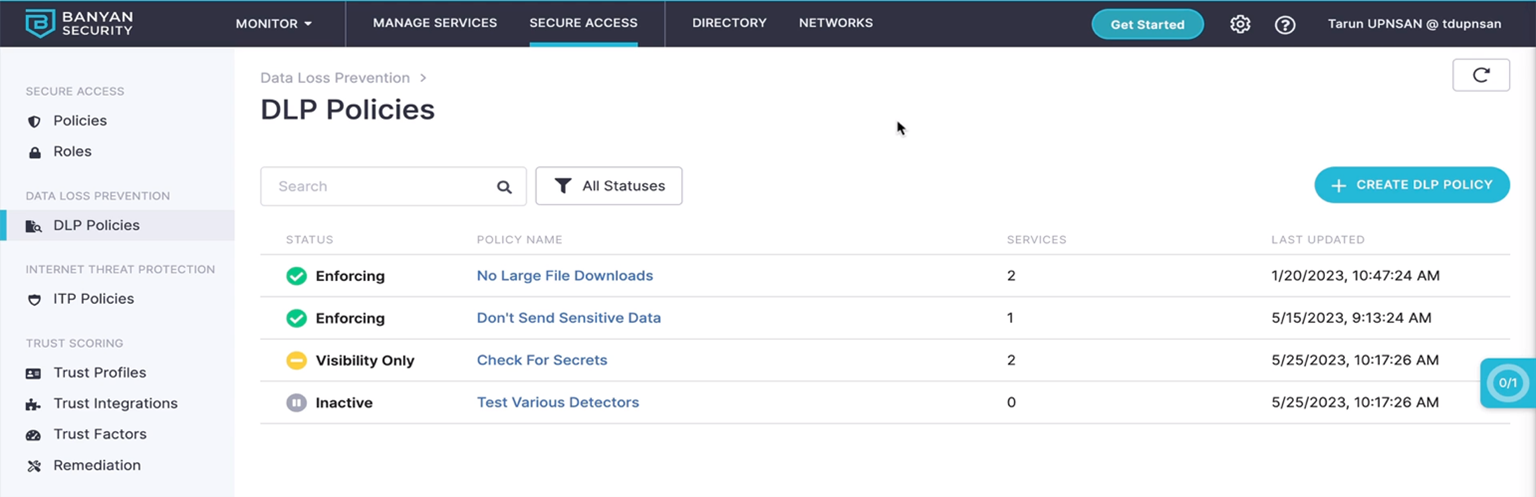
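The choice between blocking, inspecting, and allowing can be pictured as a small category-based policy lookup that also carries a message for the end user. The sketch below is only an illustration under assumed names: `Action`, `Decision`, `CATEGORY_POLICY`, and `decide` are hypothetical, as are the category labels, and none of them reflect the product’s actual policy model.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    INSPECT = "inspect"  # proxy or tunnel the traffic for further inspection
    BLOCK = "block"


@dataclass(frozen=True)
class Decision:
    action: Action
    message: str  # text shown to the end user, empty when nothing needs saying


# Hypothetical policy table; the category names are illustrative assumptions.
CATEGORY_POLICY = {
    "Generative AI": Action.INSPECT,
    "Anonymizing Proxy": Action.BLOCK,
    "Newly Registered Domain": Action.BLOCK,
}


def decide(category, default=Action.ALLOW):
    """Look up the action for a category and attach a user-facing explanation."""
    action = CATEGORY_POLICY.get(category, default)
    if action is Action.BLOCK:
        message = f"Access denied: the '{category}' category is blocked by company policy."
    elif action is Action.INSPECT:
        message = f"Allowed with inspection: '{category}' traffic is checked for sensitive data."
    else:
        message = ""
    return Decision(action, message)


if __name__ == "__main__":
    for name in ("Generative AI", "Anonymizing Proxy", "News"):
        print(name, decide(name))
```

Returning the explanation alongside the action is what lets a block page tell the user why the request was denied instead of failing silently.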

The administrator also has the option to apply a Data Loss Prevention (DLP) policy. The policies may include blocking downloads or restricting sensitive data uploads, as shown here:

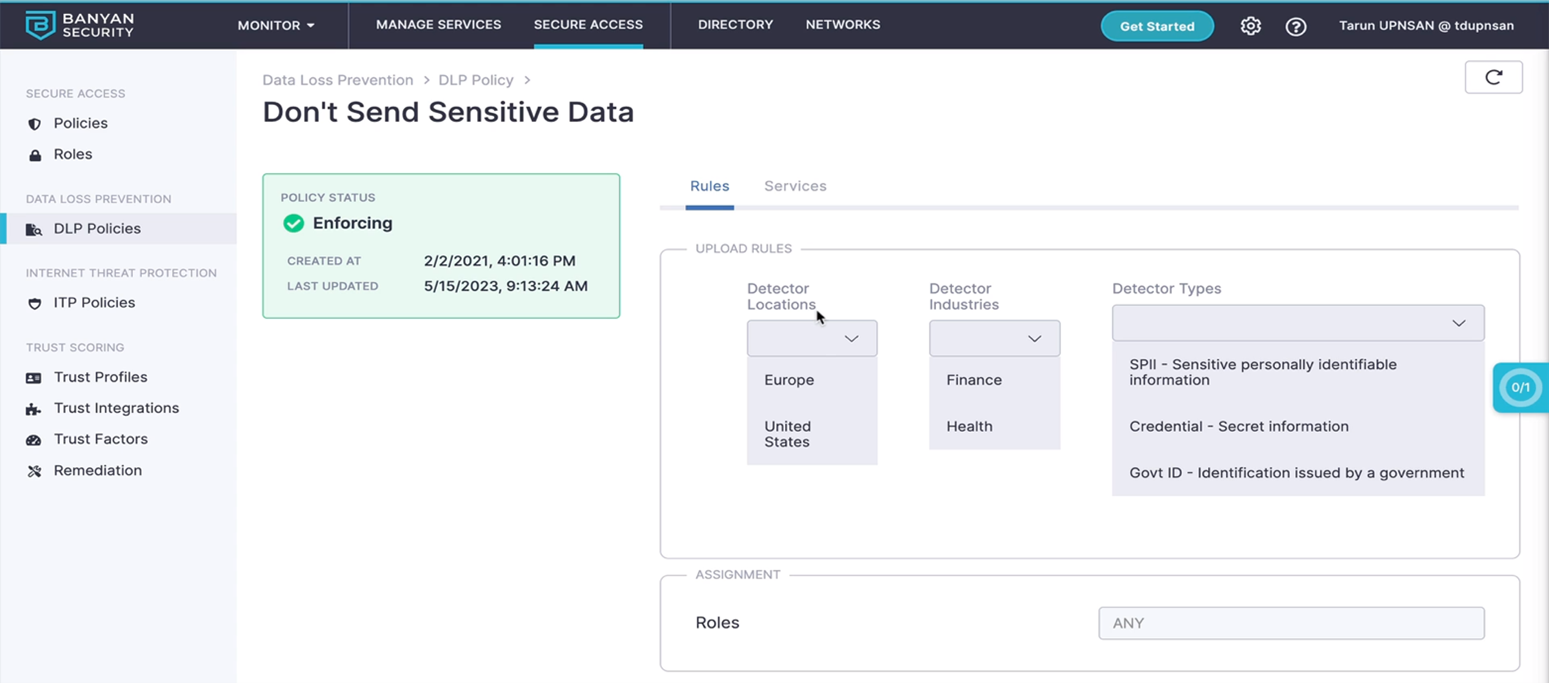

Sensitive data inspection is based on known patterns across multiple regions and countries:

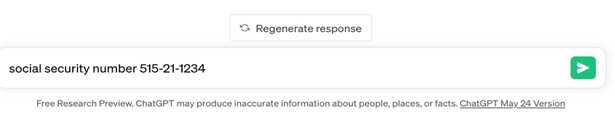

In this example, a user tries uploading a Social Security number to ChatGPT. All other interactions with ChatGPT and other AI tools that don’t involve sensitive information are allowed.

The end user is notified that the specific action is not allowed, and the interaction is blocked.
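As a rough illustration of pattern-based inspection, the sketch below checks outgoing text for a US Social Security number in the common `AAA-GG-SSSS` form and blocks only that interaction. It is a simplified assumption of how such a check might look, not the DLP engine described here: `SSN_PATTERN` and `inspect_upload` are hypothetical names, and a production engine would cover many more data types, formats, and regions.

```python
import re

# US SSN written as AAA-GG-SSSS. The lookaheads skip values that are never
# issued: area 000, 666, or 900-999, group 00, and serial 0000.
SSN_PATTERN = re.compile(r"\b(?!000|666|9\d\d)\d{3}-(?!00)\d{2}-(?!0000)\d{4}\b")


def inspect_upload(text):
    """Return ("block", reason) if the text looks like it contains an SSN, else ("allow", "")."""
    if SSN_PATTERN.search(text):
        return ("block", "Upload blocked: content appears to contain a Social Security number.")
    return ("allow", "")


if __name__ == "__main__":
    print(inspect_upload("Please summarize these meeting notes."))
    print(inspect_upload("My SSN is 123-45-6789, can you fill in this form?"))
```

Because only the matching request is rejected, ordinary prompts still go through, which mirrors the allow-by-default behavior in the example above.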

Banyan ChatGPT Security

Generative AI introduces new cybersecurity threats by enabling the creation of highly sophisticated and realistic phishing attacks, capable of tricking even the most vigilant users. Additionally, malicious actors can leverage generative AI to automate the creation of advanced malware, making it harder for traditional security solutions to detect and mitigate these evolving threats. Employees are also leaking valuable corporate intellectual property in the hopes of getting work done quickly and easily. Effective security for AI must address all of these facets.

In closing, focus on solutions that give you the ability to block access to generative AI sites and tools effectively. By leveraging advanced web filtering capabilities and DLP inspection, secure web gateways (SWGs) like the Banyan SWG can detect and prevent users from accessing websites or tools specifically designed for generative AI. These solutions analyze and categorize web content based on predefined policies, allowing administrators to create rules that identify and then block sites related to generative AI. SWGs employ a combination of URL filtering, content inspection, and machine learning algorithms to accurately identify and categorize websites and tools associated with generative AI.

By blocking access to these resources, organizations can mitigate potential risks and prevent unauthorized or inappropriate use of generative AI technologies within their networks. SWGs provide a robust defense against potential security threats, ensuring that employees are unable to access generative AI sites or tools that may compromise data integrity, violate privacy regulations, or infringe upon intellectual property rights. In summary, SWGs offer an effective solution to block access to generative AI sites and tools, helping organizations maintain control and security over their network environments.

Learn more about ChatGPT security through Banyan SSE by scheduling a custom demo today.

*** This is a Security Bloggers Network syndicated blog from Banyan Security authored by Ashur Kanoon. Read the original post at: https://www.banyansecurity.io/chatgpt-security-for-ai/?utm_source=rss&utm_medium=rss&utm_campaign=chatgpt-security-for-ai